WHAT AN NVIDIA DGX SUPERPOD DEPLOYMENT LOOKS LIKE WITH AHEAD

Introduction to an NVIDIA DGX SuperPOD Deployment

The NVIDIA® DGX SuperPOD™ reference architecture (RA) is a high-level blueprint for building scalable, performant, and reliable AI infrastructure. Because of its complexity, however, a successful deployment is no easy feat: designs must be optimized for the conditions of the data center site, and multiple IT disciplines must be involved in building the infrastructure. NVIDIA also publishes an adjacent BasePOD reference architecture, and what we discuss in this blog applies equally to both.

AHEAD has successfully designed and implemented several SuperPODs in the financial and R1 university sectors. Here we’ll discuss what an AI SuperPOD deployment looks like and the benefits of expert design.

Why SuperPOD Design Expertise Matters

Let’s start with some math. Most traditional data center racks are rated for 5 to 10kW, although a minority can handle 15 to 20kW. A single NVIDIA DGX™ B200 consumes up to 14.3kW. Being able to deploy only one DGX per rack, even with 15 to 20kW available, is neither sustainable nor effective. And future NVIDIA DGX offerings are likely to require even more power, up to 120kW for a single rack!
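
To make the rack-density arithmetic concrete, here is a minimal Python sketch that counts how many DGX B200 systems fit within a given rack power budget, using the 14.3kW figure above. It assumes each system's full peak draw must be budgeted and ignores switches, PDU losses, and redundancy; the 40kW and 60kW budgets are hypothetical values included for comparison.

```python
# Peak draw of one DGX B200 (14.3kW, as quoted above), in watts.
DGX_B200_W = 14_300


def dgx_per_rack(rack_budget_kw: float, system_w: int = DGX_B200_W) -> int:
    """Whole systems that fit in a rack's power budget.

    Simplification: ignores switches, PDUs, derating, and redundancy.
    Works in integer watts to avoid floating-point edge cases.
    """
    return round(rack_budget_kw * 1000) // system_w


for budget_kw in (10, 15, 20, 40, 60):
    print(f"{budget_kw:>3} kW rack budget -> {dgx_per_rack(budget_kw)} x DGX B200")
```

At the 15 to 20kW that even a well-provisioned traditional rack offers, only one system fits, and a typical 5 to 10kW rack cannot host a single one at full draw.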

Many power discussions for a SuperPOD also overlook the fact that even the 400Gbit Spectrum Ethernet and Quantum InfiniBand (IB) switches consume 1 to 1.7kW per switch, and upwards of 36 Ethernet and IB switches are needed per SuperPOD. All of this power becomes heat, and the significant cooling it requires must be factored into the design as well.
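
A quick sketch of the switch-layer arithmetic, using the per-switch range and switch count quoted above. It assumes every switch draws the same amount at once, which is a simplification; real draw depends on the switch models, optics, and port utilization.

```python
# Figures quoted above: 1 to 1.7kW per switch, up to 36 switches per SuperPOD.
SWITCH_KW_MIN, SWITCH_KW_MAX = 1.0, 1.7
SWITCH_COUNT = 36
DGX_B200_KW = 14.3  # used only to express the switch load in "DGX equivalents"


def switch_load_kw(count: int, per_switch_kw: float) -> float:
    """Aggregate electrical load of the network switches, in kW."""
    return count * per_switch_kw


low = switch_load_kw(SWITCH_COUNT, SWITCH_KW_MIN)
high = switch_load_kw(SWITCH_COUNT, SWITCH_KW_MAX)
print(f"Switch load: {low:.1f} to {high:.1f} kW "
      f"(roughly {low / DGX_B200_KW:.1f} to {high / DGX_B200_KW:.1f} DGX B200 systems' worth)")
```

In other words, 36 to 61.2kW goes to networking alone, comparable to several DGX systems, before any compute is counted.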

The math adds up to a rather depressing picture. Most existing data halls simply were not designed for the BTU/hr cooling loads that a SuperPOD requires. So, in most cases, hosting a SuperPOD requires retrofitting a portion of a data center or finding available co-location space. Data center layout, power and cooling, and network and storage considerations all have to factor into the new design, and the devil is in those details. Fiber cables must be cleaned, dressed, and installed correctly. You have to choose the right lengths and models of single and splitter MPO cables, transceivers, and AOC cables. HPC storage arrays might include their own internal switching and multiple node types.
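
Since the cooling question is ultimately about heat, here is a small sketch that converts IT load into cooling load in BTU/hr and tons of refrigeration, using the standard conversions 1 W ≈ 3.412 BTU/hr and 1 ton = 12,000 BTU/hr. It assumes essentially all electrical input ends up as heat in the room, the usual planning simplification; the four-system rack is a hypothetical configuration, not a figure from any specific project.

```python
# 1 W of electrical load dissipated as heat ≈ 3.412142 BTU/hr.
BTU_PER_HR_PER_W = 3.412142
# One ton of refrigeration = 12,000 BTU/hr.
BTU_PER_HR_PER_TON = 12_000


def heat_load_btu_hr(it_load_kw: float) -> float:
    """Heat to remove, assuming essentially all IT power ends up as heat."""
    return it_load_kw * 1000 * BTU_PER_HR_PER_W


def cooling_tons(it_load_kw: float) -> float:
    """Cooling capacity needed, in tons of refrigeration."""
    return heat_load_btu_hr(it_load_kw) / BTU_PER_HR_PER_TON


# Hypothetical comparison: a traditional 10kW rack vs. a rack of four DGX B200s.
for label, kw in (("10kW legacy rack", 10.0), ("4 x DGX B200", 4 * 14.3)):
    print(f"{label}: {heat_load_btu_hr(kw):,.0f} BTU/hr, {cooling_tons(kw):.1f} tons")
```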

In short, when a project is that complex, it’s critical to get it all right the first time. And without a partner with expertise across multiple areas, the chances of achieving ROI on a SuperPOD project are quite low.

How Does AHEAD Help Bring This All Together?

AHEAD partners with our clients throughout every step of a SuperPOD or BasePOD™ deployment.

1. Defining Requirements

The first step is to work with our AI experts, NVIDIA, and your team to determine the core elements of your SuperPOD: how much GPU and storage capacity will you need now and in the future, and what Ethernet and InfiniBand networking is required to support your environment under the RA guidelines?

Each SuperPOD also has advanced cluster management (ACM) servers that must be factored into the rack, power, and cooling design. AHEAD and NVIDIA work with clients to understand their future growth plans so that the network and rack designs accommodate future expansion without rework.
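
Sizing for both the current and future state can start from a simple calculation. The sketch below converts a target GPU count into DGX nodes and scalable units (SUs). DGX B200 has eight GPUs per system; the 32-node SU size is an assumption based on recent DGX SuperPOD RAs and should be confirmed against the RA for your DGX generation. The 512 and 1,000 GPU targets are hypothetical.

```python
import math

GPUS_PER_DGX_B200 = 8
# Assumption: scalable units (SUs) of 32 DGX systems, as in recent DGX SuperPOD RAs.
# Confirm the SU size in the RA for your DGX generation.
NODES_PER_SU = 32


def size_pod(target_gpus: int) -> dict:
    """Round a GPU target up to whole DGX nodes and whole scalable units."""
    nodes = math.ceil(target_gpus / GPUS_PER_DGX_B200)
    sus = math.ceil(nodes / NODES_PER_SU)
    return {"gpus": target_gpus, "dgx_nodes": nodes, "scalable_units": sus}


# Hypothetical growth plan: build for today, but size the fabric for the future state.
today = size_pod(512)
future = size_pod(1_000)
print("Build now:", today)
print("Design network for:", future)
```

Designing the fabric and rack layout around the future SU count, rather than today's, is what lets the later build-out proceed without rework.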

Once the client, AHEAD, and NVIDIA agree on the core SuperPOD design, we consider other customizations that may be needed, such as security, data protection, and additional HPC compute, as well as the upstream network design. For example, one of our R1 research university clients wanted a ~90-server HPC environment, and AHEAD designed it to share the same storage, management, and monitoring as the SuperPOD.

2. Rack Integration

Once the catalog of equipment needed for the client’s AI plans is created, the fun work begins! AHEAD has a team of experts at our Foundry rack integration facility who work with the client’s data center team or co-lo provider to design how every piece of equipment will be racked and cabled in the real-world space. AHEAD can also help select a co-lo provider or identify the changes a client’s data center needs to make AI projects work.

New for Foundry in 2025 is a facility dedicated exclusively to advanced AI and HPC rack integration for direct-to-chip liquid-cooled racks. Driven by the growing need for high-power chips and server density, this state-of-the-art facility optimizes integrated racks to meet the thermal requirements of extreme workloads.

Learn more about AHEAD Foundry.

3. Designing the Floor Plan

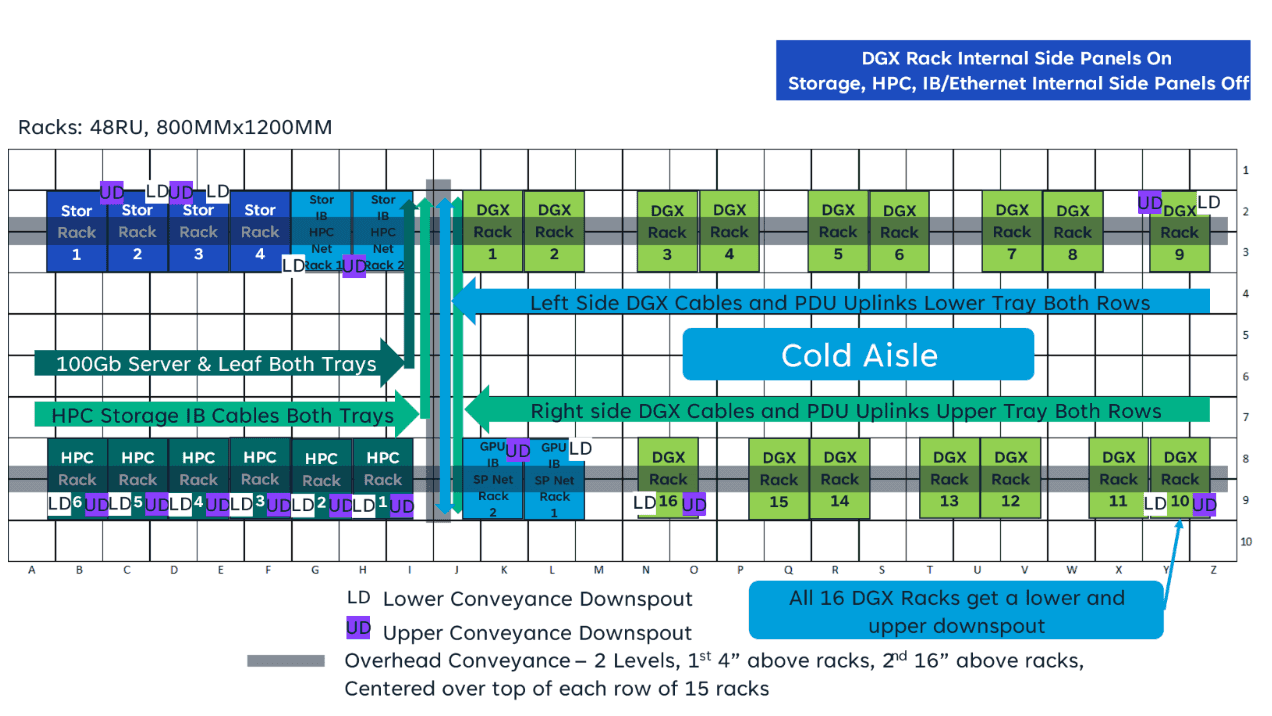

Every space is different, and even though our racks are plug-and-play, we first have to make sure the space is a perfect fit for a custom SuperPOD configuration. The level of detail can feel mundane, as you can see in the floor plan above for the R1 university SuperPOD project.

In this example, AHEAD determined that 30 racks were necessary: 10 HPC/storage racks and 20 SuperPOD racks. We consulted with the co-lo provider, the client, and NVIDIA’s architecture review board to make sure all of the environment’s requirements were thoroughly vetted. Every detail was accounted for. The two 15-rack rows needed an 8’ cold aisle between them, with space between the DGX racks to allow enough airflow (CFM) from the existing underfloor cooling system to meet the SuperPOD’s cooling needs. The co-lo designed a two-level structured cabling system for resiliency, and AHEAD designed all 1,500+ cables in the POD to use those two cable trays efficiently. It’s a level of detail that might give the average person a migraine, but we live for this stuff.
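
Aisle spacing like this is driven by airflow. A common way to estimate the airflow a rack needs is the sensible-heat equation for standard air, Q (BTU/hr) ≈ 1.08 × CFM × ΔT (°F). The sketch below rearranges it to solve for CFM. The rack load and the 20°F and 30°F temperature rises are hypothetical; actual per-system airflow should come from the equipment vendor’s specifications.

```python
BTU_PER_HR_PER_W = 3.412142
# Standard-air constant in Q[BTU/hr] = 1.08 * CFM * dT[F].
SENSIBLE_HEAT_FACTOR = 1.08


def required_cfm(it_load_kw: float, delta_t_f: float) -> float:
    """Airflow needed to carry away it_load_kw with a dT (F) rise from inlet to exhaust."""
    btu_hr = it_load_kw * 1000 * BTU_PER_HR_PER_W
    return btu_hr / (SENSIBLE_HEAT_FACTOR * delta_t_f)


# Hypothetical example: a rack of four DGX B200s (4 x 14.3kW) at two assumed temperature rises.
for dt in (20, 30):
    print(f"dT={dt}F -> {required_cfm(4 * 14.3, dt):,.0f} CFM")
```

A smaller temperature rise means more air must move for the same load, which is why underfloor delivery and rack spacing have to be checked together.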

4. Rack Elevation and Cabling Plans

Rack elevations and cabling plans are developed in tandem with the floor plan. This is all done before the client purchases the SuperPOD, so that all key transceivers and cables from NVIDIA are ordered at the right lengths and at the same time as the networking equipment and DGX systems. This detailed design extends to all components adjacent to the SuperPOD: storage, HPC servers, security devices, and so on. Dialing in these design details early greatly reduces the risk of project delays caused by cables, transceivers, or other small parts that aren’t available.
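
Ordering cables at the right lengths boils down to estimating each run and rounding up to an orderable length. The sketch below does that for rack positions given as (row, slot) and tallies a bill of materials. Every value in it (rack pitch, row-to-row tray distance, vertical drops, slack, and the list of orderable lengths) is a hypothetical placeholder, not an NVIDIA catalog or a real floor plan.

```python
from collections import Counter

# All values below are hypothetical placeholders; use your own floor plan and the
# lengths actually orderable for each cable or transceiver type.
ORDERABLE_LENGTHS_M = (1, 2, 3, 5, 7, 10, 15, 20, 30, 50)
RACK_PITCH_M = 0.6   # width of one rack position along a row
ROW_TO_ROW_M = 4.0   # tray path between the two rows
VERTICAL_M = 2.0     # rise to the tray plus drop into the far rack
SLACK_M = 1.5        # service loop and dressing allowance


def run_length_m(src: tuple[int, int], dst: tuple[int, int]) -> float:
    """Estimated tray-routed length between rack positions given as (row, slot)."""
    (row_a, slot_a), (row_b, slot_b) = src, dst
    horizontal = abs(slot_a - slot_b) * RACK_PITCH_M + abs(row_a - row_b) * ROW_TO_ROW_M
    return horizontal + VERTICAL_M + SLACK_M


def order_length_m(run_m: float) -> int:
    """Shortest orderable length that covers the estimated run."""
    for length in ORDERABLE_LENGTHS_M:
        if length >= run_m:
            return length
    raise ValueError(f"{run_m:.1f} m run exceeds the longest orderable length")


# Hypothetical links between rack positions.
links = [((0, 0), (0, 1)), ((0, 2), (0, 3)), ((0, 0), (1, 3)), ((0, 5), (1, 14))]
bom = Counter(order_length_m(run_length_m(a, b)) for a, b in links)
for length, qty in sorted(bom.items()):
    print(f"{qty} x {length} m")
```

In a real plan the link list would come from the rack elevations, and separate tallies would be kept per cable or transceiver type.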

5. Deployment

Once the final SuperPOD design is agreed on, AHEAD Foundry rolls up its sleeves to build and deliver. Building SuperPODs and other NVIDIA AI PODs at Foundry ensures consistent quality across projects and allows extensive customization for preferences such as asset tagging, hostnames, or cable labeling standards.
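
Customizations like hostnames and cable labels are easiest to keep consistent when they are generated from a single rule rather than typed by hand. The sketch below uses a hypothetical naming scheme (site code, role, rack, and rack unit) purely for illustration; it is not an AHEAD or NVIDIA standard.

```python
def hostname(site: str, role: str, rack: int, unit: int) -> str:
    """Hypothetical scheme: <site>-<role>-r<rack>u<unit>, lowercase, zero-padded."""
    return f"{site}-{role}-r{rack:02d}u{unit:02d}".lower()


def cable_label(a: tuple[int, int, int], b: tuple[int, int, int]) -> str:
    """Hypothetical label printed on both ends: R<rack>-U<unit>-P<port> for each end."""
    def end(rack: int, unit: int, port: int) -> str:
        return f"R{rack:02d}-U{unit:02d}-P{port:02d}"
    return f"{end(*a)} / {end(*b)}"


print(hostname("NYC1", "dgx", 3, 10))
print(cable_label((3, 10, 1), (15, 40, 17)))
```

Because both functions derive names from rack positions, the same inputs used for the rack elevations can drive asset tags and labels.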

The Foundry supply chain team optimizes all incoming deliveries to our integration facility and ensures that all SuperPOD components arrive onsite when expected. Meanwhile, AHEAD’s Hatch® platform offers the client full visibility into the supply chain for all SuperPOD purchases.

All equipment is tested at Foundry by AHEAD’s NVIDIA-certified SuperPOD teams, with dead-on-arrival (DOA) units remediated and details tracked in Hatch. Once the racks and cabling are complete, each rack is carefully crated and shipped on dedicated trucks to the customer’s data center or co-lo, where AHEAD on-site personnel install, power, and test every rack for equipment or connection issues.
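
One way to check for connection issues is to compare the planned cabling map from the design phase against the neighbor information actually reported by the installed equipment. The sketch assumes both are already available as simple (device, port) to (device, port) mappings; how the observed data is collected (for example, LLDP on Ethernet or fabric discovery tools on InfiniBand) is outside its scope, and all device and port names are hypothetical.

```python
Endpoint = tuple[str, str]  # (device, port)


def check_cabling(planned: dict[Endpoint, Endpoint],
                  observed: dict[Endpoint, Endpoint]):
    """Compare the design-time cabling map with what the installed gear reports.

    Returns (missing, miswired, unexpected):
      missing    - planned local ports with no observed neighbor
      miswired   - (port, expected_peer, actual_peer) where the neighbor differs
      unexpected - observed ports that do not appear in the plan
    """
    missing, miswired = [], []
    for port, expected_peer in planned.items():
        actual_peer = observed.get(port)
        if actual_peer is None:
            missing.append(port)
        elif actual_peer != expected_peer:
            miswired.append((port, expected_peer, actual_peer))
    unexpected = [port for port in observed if port not in planned]
    return missing, miswired, unexpected


# Hypothetical device and port names for illustration.
planned = {
    ("dgx01", "p1"): ("leaf01", "swp1"),
    ("dgx01", "p2"): ("leaf02", "swp1"),
    ("dgx02", "p1"): ("leaf01", "swp2"),
}
observed = {
    ("dgx01", "p1"): ("leaf01", "swp1"),
    ("dgx01", "p2"): ("leaf01", "swp3"),  # landed on the wrong switch
    ("dgx03", "p1"): ("leaf02", "swp9"),  # not in the plan
}
missing, miswired, unexpected = check_cabling(planned, observed)
print("missing:", missing)
print("miswired:", miswired)
print("unexpected:", unexpected)
```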

6. Onboarding and Management

When everything is plugged in and tested, AHEAD on-site personnel link up with members of the NVIDIA Infrastructure Specialists (NVIS) team to continue the logical deployment of the SuperPOD and adjacent equipment.

After physical implementation and deployment, AHEAD can also provide oversight and accountability with ongoing program management for your SuperPOD infrastructure. Our experts in DGX, data science, networking, storage, and security are here to help you optimize your new infrastructure for business value.

Ready to Build Your SuperPOD?

If you’re considering on-premises AI infrastructure, AHEAD can ensure your success with an NVIDIA SuperPOD or any BasePOD design. Having an AI infrastructure partner you can trust allows you to concentrate on the applications and innovative business capabilities you want to build. Investing in AI infrastructure is a large financial commitment, and it’s imperative that the environment is stood up efficiently and correctly the first time to get the maximum business value.

Leverage our expertise and strategic partnership with NVIDIA to ensure your AI journey is a success by getting in touch with AHEAD at our booth #2203 at NVIDIA GTC 2025.

Learn more about AHEAD AI solutions and our NVIDIA partnership.

About the author

David Smiley

Principal Specialist Solutions Engineer

David is passionate about delivering large scale IT infrastructure projects with customers and specializes in AI deployments.
