CAN MACHINE LEARNING & AI PREDICT A WINNING MARCH MADNESS BRACKET?

Contributing Author: Ryan McKiernan, Associate Technical Consultant

For many sports fans, March means only one thing: The NCAA Tournament is here. March Madness is among the most exciting events in all of sports, with 68 teams in each of the men’s and women’s fields squaring off in a single-elimination event that is packed with chaos. A significant part of the thrill is the popular tradition of filling out a bracket, selecting a winner for each game of the tournament with the goal of picking a perfect bracket. But it’s easier said than done. In fact, no one has ever correctly picked all 67 games, and only a small handful have even made it out of the first weekend without missing a pick.

When it comes to completing a bracket, there are many approaches that fans swear by. Some get crazy with the statistics, others opt for the mascots they like the most, and some simply trust their gut. With the recent rise in machine learning and artificial intelligence (AI) capabilities and accessibility, many wonder if these technologies can do what no one else has done before by picking a 100% accurate March Madness bracket. With that in mind, we set out to build a model that can correctly predict as many games as possible.

To recap what that process looked like, some of the key steps included:

- Finding quality data sources with valued inputs

- Cleaning/formatting data such that it can be properly ingested

- Performing a training/validation split so that overfitting can be detected

- Testing different types of models to find the highest accuracy

- Tuning the hyperparameters that can be adjusted to improve a given type of model

Below, we’ll walk through the steps we’re using to complete our 2024 bracket in an effort to demonstrate some of the forecasting and data analysis capabilities of machine learning and AI that are transforming the way organizations do business.

Data Collection & Cleansing

The first step in the journey toward creating a successful machine learning algorithm is figuring out what we want to train on. Knowing that we’re trying to classify whether a team will win a game in the tournament, we ended up using data from KenPom, which has an abundance of both team characteristics and in-game metrics. From there, we needed to clean our data and format it so that it can be properly ingested. This includes getting rid of unnecessary columns and performing a training/validation split, so that we measure accuracy on games the model has not seen and can tell when it is overfitting.
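The cleaning and splitting steps described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the pipeline used for the bracket: the rows, the column names (`adj_eff_margin`, `tempo`, `won`), and the helper functions are all hypothetical and do not reflect KenPom's actual export format.

```python
import random

# Hypothetical rows, one per team-game. Column names are illustrative only.
RAW_ROWS = [
    {"team": "A", "coach": "x", "adj_eff_margin": "21.4", "tempo": "67.1", "won": "1"},
    {"team": "B", "coach": "y", "adj_eff_margin": "8.9", "tempo": "70.3", "won": "0"},
    {"team": "C", "coach": "z", "adj_eff_margin": "15.2", "tempo": "64.8", "won": "1"},
    {"team": "D", "coach": "w", "adj_eff_margin": "", "tempo": "68.0", "won": "0"},
    {"team": "E", "coach": "v", "adj_eff_margin": "3.1", "tempo": "66.5", "won": "0"},
    {"team": "F", "coach": "u", "adj_eff_margin": "18.7", "tempo": "69.9", "won": "1"},
]

FEATURES = ["adj_eff_margin", "tempo"]  # columns kept as model inputs
LABEL = "won"                           # 1 if the team won the game, else 0


def clean(rows, features=FEATURES, label=LABEL):
    """Keep only the needed columns, cast them to numbers, drop incomplete rows."""
    cleaned = []
    for row in rows:
        try:
            x = [float(row[name]) for name in features]
            y = int(row[label])
        except (KeyError, ValueError, TypeError):
            continue  # missing or non-numeric value: skip this row
        cleaned.append((x, y))
    return cleaned


def train_validation_split(examples, validation_fraction=0.25, seed=0):
    """Shuffle reproducibly, then hold out a fraction of examples for validation."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(round(len(shuffled) * validation_fraction)))
    return shuffled[n_val:], shuffled[:n_val]


examples = clean(RAW_ROWS)
train, validation = train_validation_split(examples)
print(len(examples), len(train), len(validation))
```

A random split is the simplest choice. With real tournament data, holding out whole seasons may give a more honest estimate, since games from the same year share teams.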

Model Building & Exploration

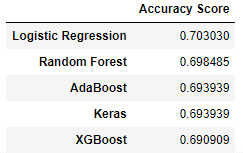

The next task is figuring out which model is best suited to our dataset. Classification models range in complexity, from simpler ones such as logistic regression to more complex structures like neural networks. Many of these models have ‘hyperparameters’: settings chosen before training, rather than learned from the data, that give us a flexible way to tune the model and improve accuracy. To try out these possibilities, we can perform a grid search, which trains each combination of model and hyperparameter values and compares their accuracy on the validation set. The combination with the highest validation accuracy is the one we carry forward.
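To make the idea of a grid search concrete, here is a small self-contained sketch. It is an assumption-laden toy rather than the search actually run for the bracket: the data is synthetic, only one model family (logistic regression trained by gradient descent) is searched, and the grid values and function names are made up for illustration.

```python
import itertools
import math
import random


def make_data(n, seed):
    """Synthetic stand-in for game rows: x = [rating gap, tempo gap], y = 1 for a win."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.gauss(0, 1), rng.gauss(0, 1)]
        # Win probability rises with the rating gap; the tempo gap is pure noise.
        p = 1 / (1 + math.exp(-2.0 * x[0]))
        data.append((x, 1 if rng.random() < p else 0))
    return data


def fit_logistic(train, learning_rate, l2, epochs=200):
    """Batch gradient descent for L2-regularised logistic regression."""
    n = len(train)
    w = [0.0] * len(train[0][0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in train:
            z = b + sum(wi * xi for wi, xi in zip(w, x))
            err = 1 / (1 + math.exp(-z)) - y  # predicted probability minus label
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        w = [wi - learning_rate * (g / n + l2 * wi) for wi, g in zip(w, grad_w)]
        b -= learning_rate * grad_b / n
    return w, b


def accuracy(model, examples):
    """Fraction of examples whose predicted class matches the label."""
    w, b = model
    hits = 0
    for x, y in examples:
        predicted_win = b + sum(wi * xi for wi, xi in zip(w, x)) > 0
        hits += predicted_win == (y == 1)
    return hits / len(examples)


def grid_search(train, validation, grid):
    """Fit one model per hyperparameter combination; keep the best validation score."""
    best_score, best_params = -1.0, None
    for values in itertools.product(*grid.values()):
        params = dict(zip(grid.keys(), values))
        score = accuracy(fit_logistic(train, **params), validation)
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


GRID = {"learning_rate": [0.01, 0.1, 0.5], "l2": [0.0, 0.1, 1.0]}  # illustrative values
train, validation = make_data(200, seed=1), make_data(100, seed=2)
best_score, best_params = grid_search(train, validation, GRID)
print(best_params, best_score)
```

Comparing several model families works the same way: each family contributes its own grid, and every candidate is scored on the same validation set.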

So, does this mean that we’re ready to test the model on this year’s bracket? Not quite.

Feature Specification

Part of devising a solution in data science and machine learning is being able to examine why something isn’t being predicted correctly and how we can extract value from certain scenarios. While machine learning algorithms can certainly decipher things we don’t already know, they don’t know everything (at least not yet). It’s easy to look at the presumably accurate results and say “that’s the answer,” but sometimes we need to look at things from a different angle, especially for events that are inherently uncertain.

For example, we can look at the results of last year’s predictions and say, “that’s the optimal bracket,” but is the higher seed winning almost every game on the strength of a better “power rating” really the story of a tournament? Not typically. We frequently see shocking upsets in the first round, or a ‘Cinderella’ team making a deep run. So how do we identify those teams and situations if, on the surface, they aren’t better than their opponents? Those are the kinds of questions we want not only to ask but to explore in depth.

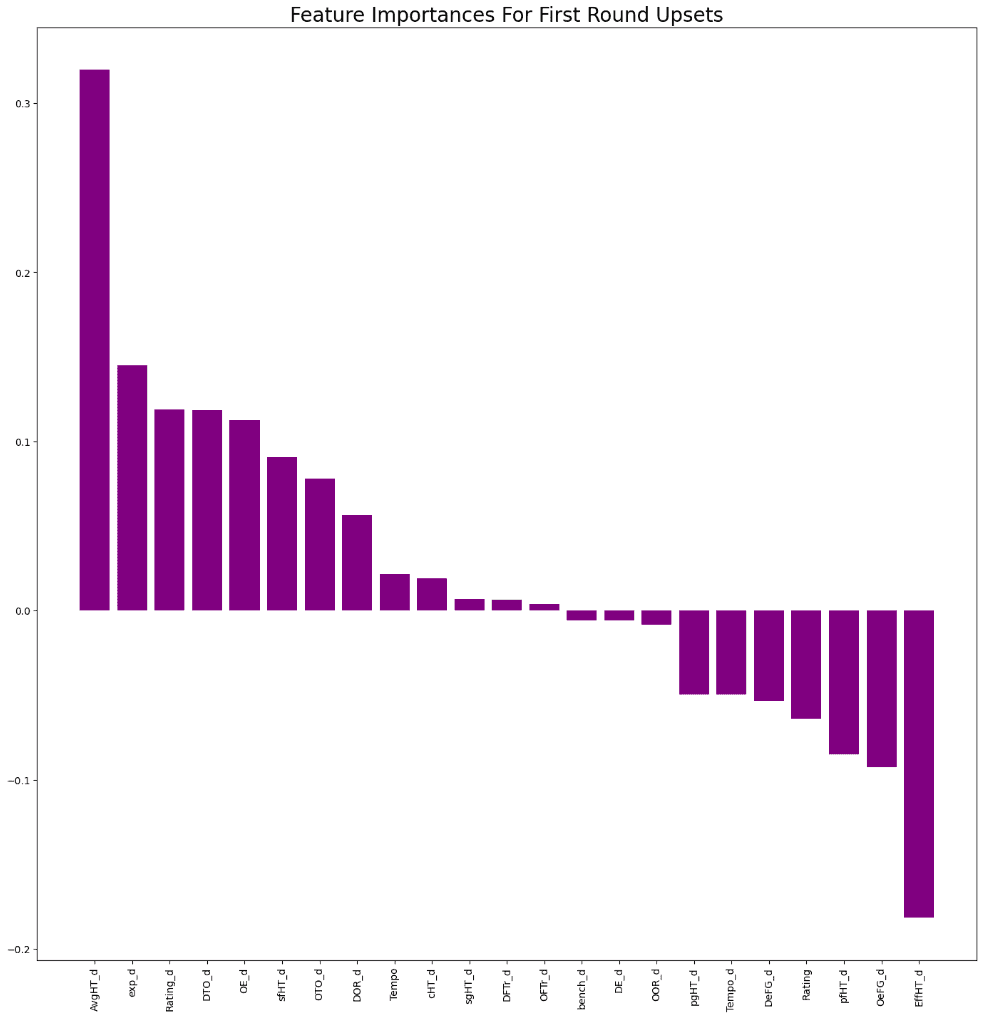

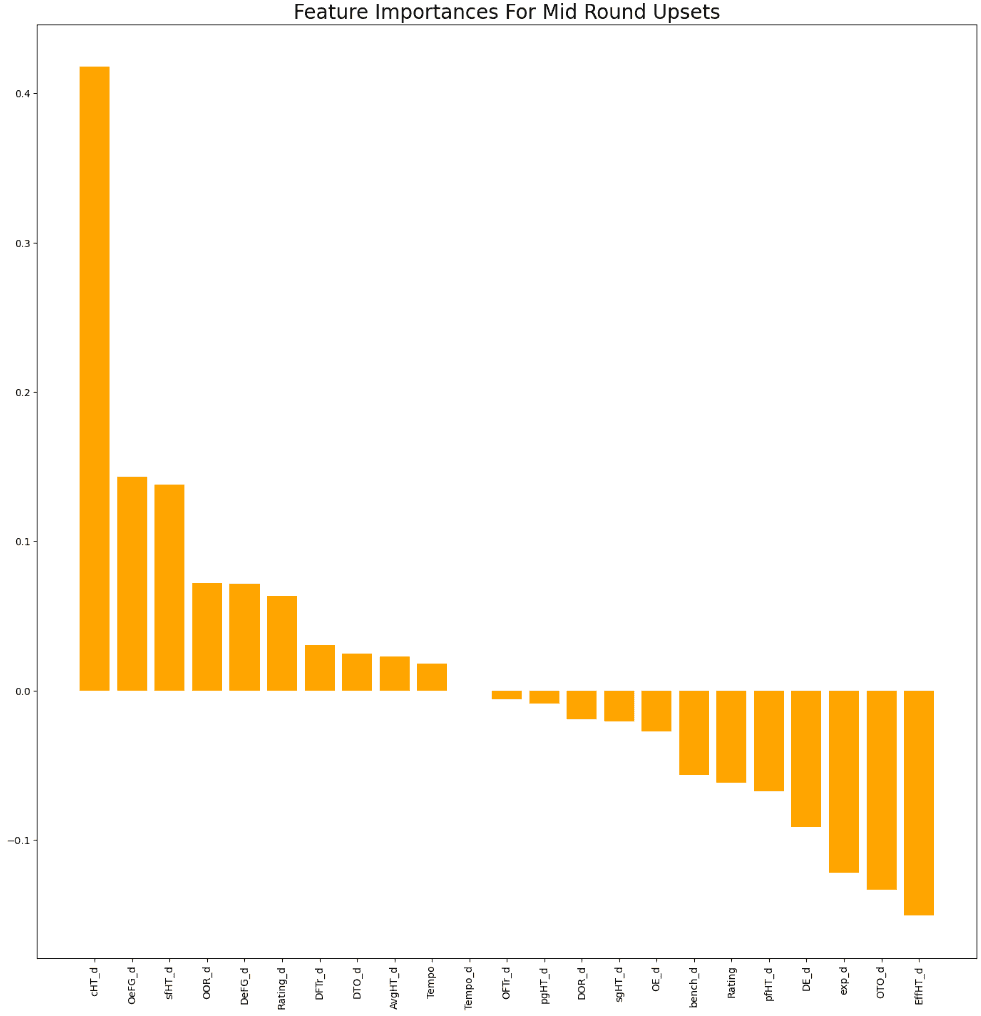

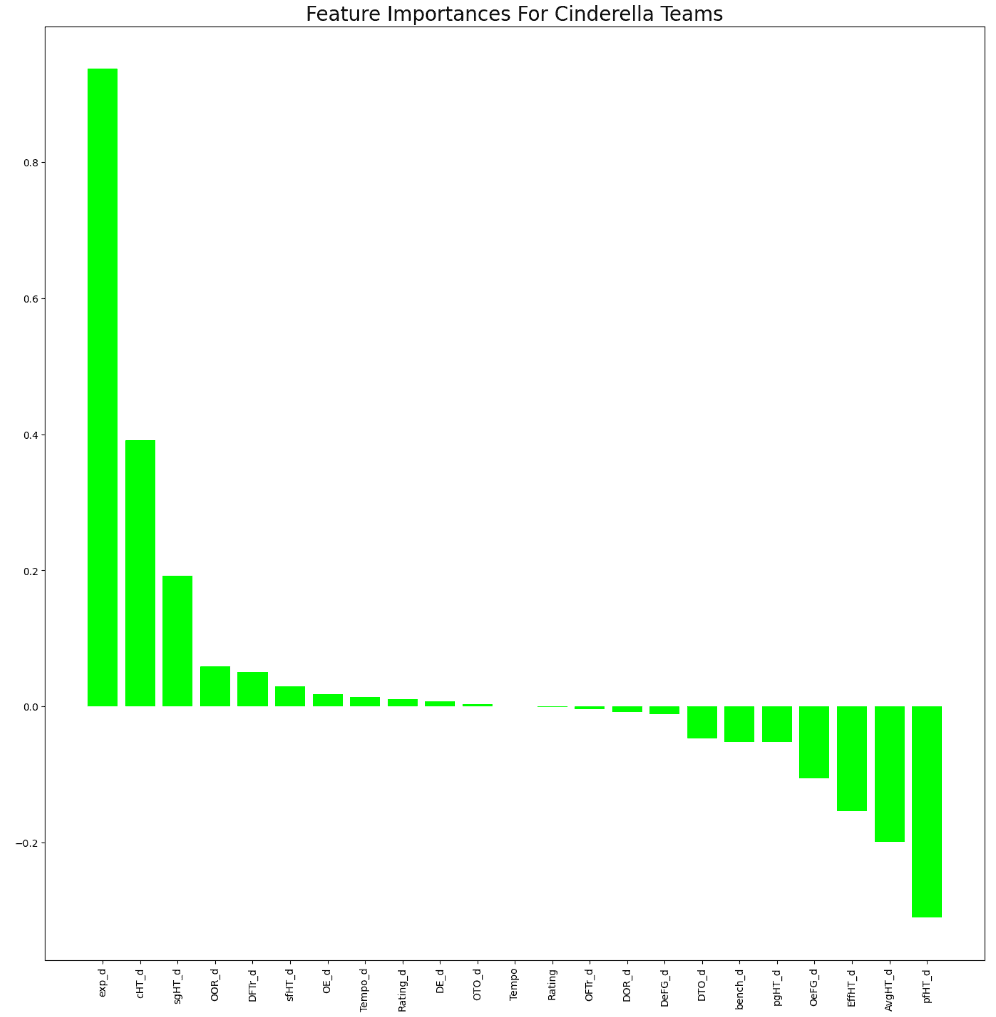

To answer some of these questions, we need to focus on the subsets of the teams/games from our data that match these scenarios. Once defined, we can create what is referred to as a feature importance chart, which shows the weight that certain factors carry in predicting a positive outcome for that scenario. Even finding just one metric that stands out could be enough to make a pick that spices up our bracket. For the examples below, we’ll examine the top metrics, some teams to look out for, and a game or two where targeting the underdog looks promising.
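One common way to obtain the numbers behind a feature importance chart is permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops. The sketch below illustrates that technique only. The article does not say how its importance values were computed, so the method, the nearest-centroid model, the synthetic data, and the feature names here are all assumptions.

```python
import random


def fit_centroids(rows, labels):
    """Nearest-centroid 'model': the mean feature vector of each class."""
    centroids = {}
    for cls in set(labels):
        members = [r for r, y in zip(rows, labels) if y == cls]
        centroids[cls] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def predict(centroids, row):
    """Return the class whose centroid is closest to the row."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(row, center))
    return min(centroids, key=lambda cls: sq_dist(centroids[cls]))


def accuracy(centroids, rows, labels):
    return sum(predict(centroids, r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(centroids, rows, labels, names, seed=0):
    """Accuracy lost when each feature's column is shuffled, one feature at a time."""
    rng = random.Random(seed)
    baseline = accuracy(centroids, rows, labels)
    importance = {}
    for j, name in enumerate(names):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between this feature and the outcome
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importance[name] = baseline - accuracy(centroids, shuffled, labels)
    return importance


# Synthetic scenario subset: label 1 means the upset happened.
# "forced_turnover_rate" is built to be informative; "tempo" is pure noise.
NAMES = ["forced_turnover_rate", "tempo"]
rng = random.Random(3)
labels = [i % 2 for i in range(200)]
rows = [[rng.gauss(3.0 * y, 1.0), rng.gauss(0.0, 1.0)] for y in labels]

model = fit_centroids(rows, labels)
importance = permutation_importance(model, rows, labels, NAMES)
for name, drop in sorted(importance.items(), key=lambda item: -item[1]):
    print(f"{name}: {drop:.3f}")
```

For brevity the importance is measured on the same rows the model was fitted on. With real data, computing it on held-out games would be the safer choice.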

The First Round Upset

Defined as a team seeded 11 or lower (that is, seeds 11 through 16) winning their first game in the tournament.

Key Metric(s): “DTO_d” (the rate at which a defense forces turnovers), experience, avg. height

Teams of Interest: McNeese State, Samford, James Madison

Game(s) to pick: Samford over Kansas

The Mid Round Upset

Defined as a team seeded 5 or lower (seeds 5 through 16) upsetting a team seeded 4 or higher (seeds 1 through 4) in either the Round of 32 or the Sweet 16.

Key Metric(s): “oEFG” (offense effective FG%), height of center

Teams of Interest: Gonzaga, Dayton, Florida, Drake, BYU, Wisconsin, Florida Atlantic

Game(s) to pick: BYU over Illinois

The Cinderella

Defined as a team seeded 5 or lower (seeds 5 through 16) getting to at least the Elite 8.

Key Metric(s): Experience

Teams of Interest: TCU, Texas, Nevada, South Carolina, Clemson, Mississippi State, NC State

Team(s) to make deep tournament run: Mississippi State
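The three scenario definitions above translate directly into labelling rules that can be used to pick out the matching subset of historical games. The sketch below is one way to write them down. The `Game` record, the round numbering, and the function names are hypothetical, and it assumes that a team's "first game" means its Round of 64 game.

```python
from dataclasses import dataclass

# Round numbering is an assumption made for this sketch.
ROUND_OF_64, ROUND_OF_32, SWEET_16, ELITE_8 = 1, 2, 3, 4


@dataclass(frozen=True)
class Game:
    round_number: int
    winner_seed: int
    loser_seed: int


def is_first_round_upset(game):
    """A team seeded 11 through 16 wins its Round of 64 game."""
    return game.round_number == ROUND_OF_64 and game.winner_seed >= 11


def is_mid_round_upset(game):
    """A seed of 5 through 16 beats a seed of 1 through 4 in the Round of 32 or Sweet 16."""
    return (
        game.round_number in (ROUND_OF_32, SWEET_16)
        and game.winner_seed >= 5
        and game.loser_seed <= 4
    )


def is_cinderella(seed, deepest_round_reached):
    """A team seeded 5 through 16 reaches at least the Elite 8."""
    return seed >= 5 and deepest_round_reached >= ELITE_8


# Made-up games, used only to exercise the rules.
print(is_first_round_upset(Game(ROUND_OF_64, winner_seed=13, loser_seed=4)))
print(is_mid_round_upset(Game(SWEET_16, winner_seed=6, loser_seed=3)))
print(is_cinderella(seed=11, deepest_round_reached=ELITE_8))
```

Note that a "lower" seed is a numerically larger one, which is why the rules compare with `>=`.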

Final Four and Champion

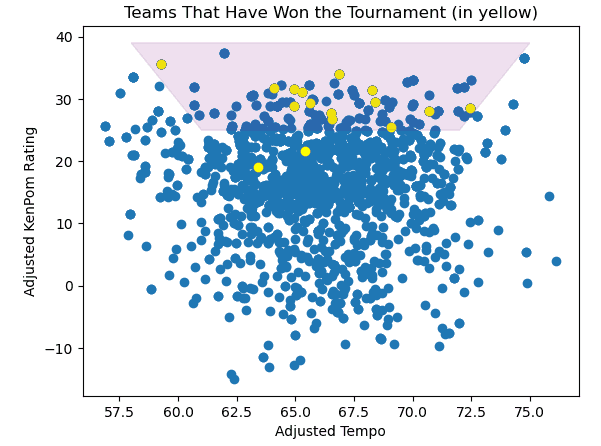

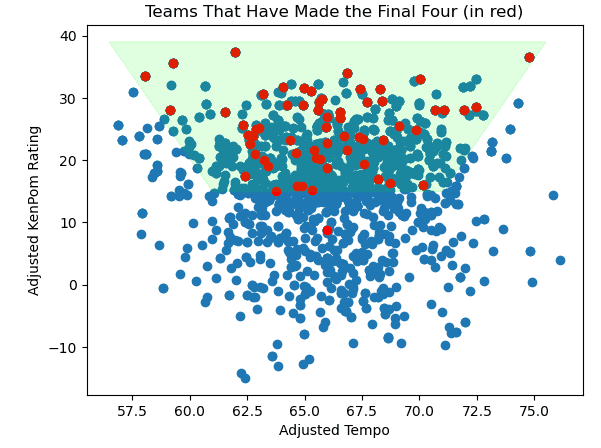

While the scenarios above are important, the most important part of a good bracket is correctly identifying the champion (and the Final Four). For this, we’ll look at scatter plots of every team that has made it that far in the past 16 tournaments, plotted by KenPom rating and game tempo. Champions are shown in yellow and Final Four teams in red.

If we look at where these points fall, we notice that most sit inside a distinct region. This is consistent with the “Trapezoid of Excellence” theory, which uses that region to judge whether or not a team can win the whole thing.

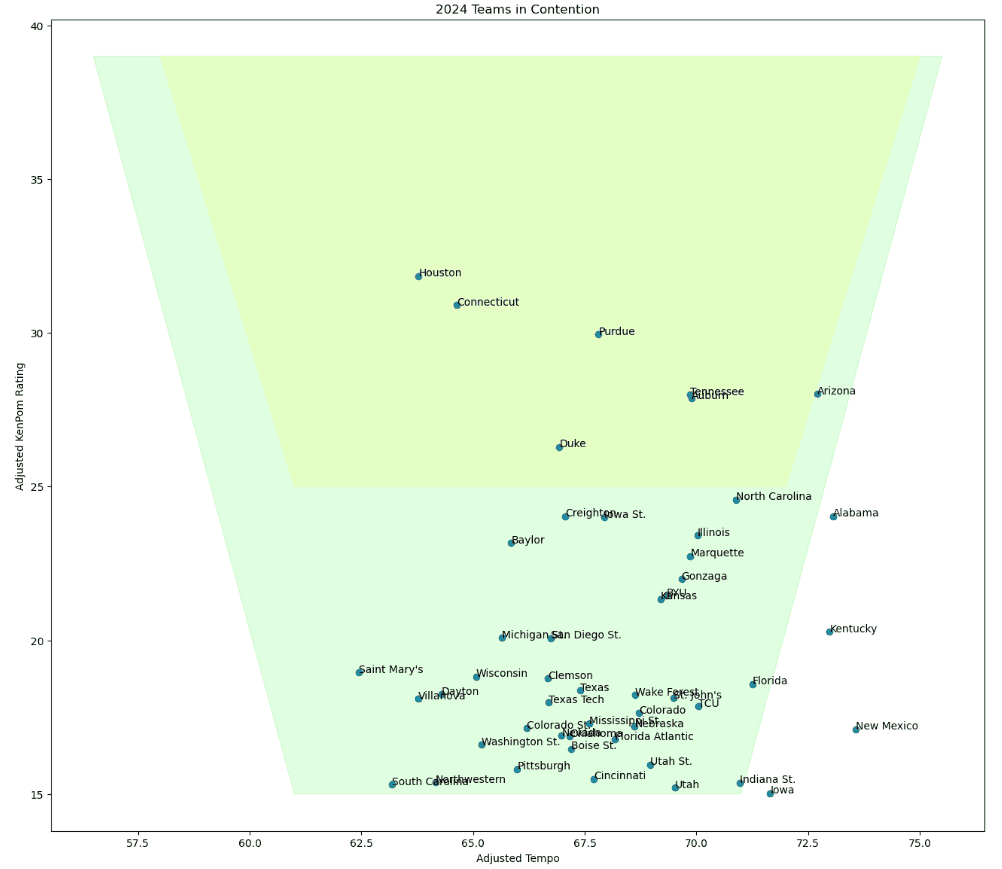

If we apply this to this year’s teams, we find that while it doesn’t help narrow down the Final Four, it does provide us with six realistic champions: Houston, UConn, Purdue, Tennessee, Auburn, and Duke. It also picks out a few teams, such as Alabama and Kentucky, that play at too fast a pace to be consistent enough to make a deep run.
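Checking whether a team falls inside such a region is a point-in-polygon test on its (tempo, rating) pair. The sketch below shows one way to do that for a convex shape. The trapezoid corners and the example teams are placeholders invented for illustration; the article does not give the actual boundary, and these values should not be read as the real one.

```python
# Placeholder corners as (tempo, rating) pairs, listed in order around the shape.
# These numbers are invented for the example and are NOT the real boundary.
TRAPEZOID = [(62.0, 20.0), (72.0, 20.0), (69.0, 35.0), (64.0, 35.0)]


def inside_convex_polygon(point, polygon):
    """True if the point lies inside or on the edge of a convex polygon.

    For each edge, the cross product tells us which side of the edge the point
    is on. The point is inside when it is on the same side of every edge.
    """
    px, py = point
    signs = []
    for i, (ax, ay) in enumerate(polygon):
        bx, by = polygon[(i + 1) % len(polygon)]
        cross = (bx - ax) * (py - ay) - (by - ay) * (px - ax)
        signs.append(cross)
    return all(s >= 0 for s in signs) or all(s <= 0 for s in signs)


# Hypothetical teams: name -> (tempo, rating).
teams = {
    "Balanced U": (67.0, 28.0),   # moderate pace, strong rating
    "Run-and-Gun": (75.0, 28.0),  # strong rating, but plays too fast
    "Longshot": (67.0, 10.0),     # right pace, rating too low
}

contenders = [name for name, point in teams.items()
              if inside_convex_polygon(point, TRAPEZOID)]
print(contenders)
```

Because the test accepts corners listed in either direction, the same function works if the region is redrawn from the scatter plots with different vertices.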

Conclusion & 2024 Bracket

Machine learning is an incredibly powerful tool that will continue to play a significant role in society, well beyond predicting the winners of a basketball tournament. But that doesn’t mean it will always have the best answer to our questions. It’s therefore important that we interpret the findings of these models properly and draw conclusions from them in a way that serves our objectives, whether that’s building a bracket or delivering enterprise technology solutions for a customer.

Check out the complete 2024 bracket below.
